(open)ZFS Lite: LVM2 + VDO

When you want next-generation file storage on Linux, you go with either ZFS or Btrfs. The first is tricky because it is not GPL software, so on most distros you depend on DKMS modules and third-party repos. Personally I use AlmaLinux 9, which is a RHEL 9 clone, so I will focus on that in this post.

Luckily there is a third option these days: VDO. “VDO is a device-mapper target that provides inline block-level de-duplication, compression, and thin provisioning capabilities for primary storage. VDO is managed through LVM and can be integrated into any existing storage stack.” It was originally a small closed-source solution, but already in production use. At some point Red Hat bought it, open sourced it and started to promote it in their knowledge base. That is when I first heard about it. I had ZFS up and running for my large storage array, but not for my main OS partitions. VDO sounded like a good solution:

- I had neither the intention nor the need to go the brave route of running ZFS on my root partition. LVM with snapshots and easy resizing options was enough for my primary single-SSD storage.

- I use Flatpak and Podman containers intensively, so data de-duplication at the very least sounded tempting.

- Transparent compression is always nice too.

In the beginning VDO was a self-contained software stack, but later editions were integrated into LVM. Basically you have a kernel module and a set of user space utilities to manage it: https://github.com/dm-vdo/

Until recently all of this was third party, but starting with Linux kernel 6.9 it is included in the mainline kernel. That is actually good news: not yet another DKMS module, and not per se some Red Hat pet project either.

A little warning: all of this comes at a price. Transparent compression and especially data de-duplication have performance and resource implications! Although I must say the mainline implementation today performs adequately: not as fast as a modern NVMe SSD can go, but still fast enough … I will show you some pictures later 🙂

Speaking of performance: VDO comes with a ton of configurables, all of which are stored in the LVM storage itself. They are thoroughly described in the main LVM config file, so if you want to give it a try I suggest you look there for more information. Storing the config directly in the storage array has its pros and cons: it will survive a reboot or even a reinstall of your OS, but on the downside it can’t be changed on a running volume. The latter can be challenging if you use VDO on your root partition like I do. You would need a boot medium capable of using the VDO stack. A recent Fedora boot stick can do this, but the in-kernel 6.9+ version is not 100% compatible with the previous non-mainline version. So beware of that!

Lastly, if you want to use VDO on your root partition you must include the VDO kernel module in your dracut initramfs. I also strongly recommend including the userspace utils, so that if you are dropped to an emergency shell you can at least fix some things right there and then. Saved me at least once 😀

So without further delay, let’s see what needs to be done.
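To browse those settings without scrolling through the whole file, a small helper can pull out just the VDO lines. This is only a sketch: the function name `lvm_vdo_settings` is made up for illustration, and it assumes the config file sits at /etc/lvm/lvm.conf and that the settings carry a `vdo_` prefix.

```shell
# Print every vdo_* setting line from an LVM configuration file,
# including the commented-out defaults.
# The default path is an assumption; pass another file as $1 if needed.
lvm_vdo_settings() {
    conf_file="${1:-/etc/lvm/lvm.conf}"
    grep -E '^[[:space:]]*(#[[:space:]]*)?vdo_[a-z_]+[[:space:]]*=' "$conf_file"
}
```

Calling `lvm_vdo_settings` with no argument reads the default file. The explanatory comments above each setting are not printed, so you still want to open the file itself for the details.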

First you need to decide whether you go the mainline Linux or the standard RHEL/Fedora route. In the former case, compiling the kernel with this option will suffice:

CONFIG_DM_VDO=m

In the latter case you either add an additional repo or use the provided packages for the DKMS module. In both cases you need the userland tooling.
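For the mainline route, a quick way to see whether a given kernel was built with that option is to look in its config file. The helper below is a minimal sketch: the name `vdo_kconfig_state` is hypothetical, and the default path /boot/config-<kernel version> is an assumption that holds on many distros but not all.

```shell
# Report how CONFIG_DM_VDO is set in a kernel config file.
# Prints "module", "builtin" or "missing".
vdo_kconfig_state() {
    # Assumed default location; pass a different file as $1 if needed.
    config_file="${1:-/boot/config-$(uname -r)}"
    if grep -q '^CONFIG_DM_VDO=m$' "$config_file" 2>/dev/null; then
        echo "module"
    elif grep -q '^CONFIG_DM_VDO=y$' "$config_file" 2>/dev/null; then
        echo "builtin"
    else
        echo "missing"
    fi
}
```

Note that "missing" only says something about the in-kernel driver. A separately packaged module would not show up in this file.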

You start with a volume group with enough free space for your new volume. I will assume the defaults, with compression and data de-duplication enabled, so you actually assign more space than you really have. This can easily be twice the amount, but beware: if you ever exceed the real physical capacity of your VDO volume and are unable to extend it, you are in serious trouble. Read: recover from the backups you surely made before 😉 You have been warned!

The defaults are quite conservative, so set them right from the start. This is no exact science, but I based mine on the hardware I have at hand, a CPU with 16 cores and 32 threads and 128GB of memory:
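The over-provisioning arithmetic is simple enough to put in a tiny helper. This is just a sketch with a made-up function name, `vdo_virtual_size_g`. It works in whole gigabytes with integer math and takes the extra percentage as a parameter (30 is only a default here, pick what suits your data).

```shell
# Compute a virtual size from a physical size (whole GiB) and an
# over-provisioning percentage, using integer arithmetic only.
vdo_virtual_size_g() {
    physical_g="$1"
    extra_percent="${2:-30}"   # illustrative default, not a recommendation
    echo $(( physical_g * (100 + extra_percent) / 100 ))
}
```

For example, `vdo_virtual_size_g 100 30` prints 130 and `vdo_virtual_size_g 100 100` prints 200 (the "twice the amount" case). Fractions are rounded down.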

# lvcreate --type vdo --name <volume name> --size <your size in G> --virtualsize <a larger size, I used +30% based on my experience with my day to day storage load> --vdosettings 'ack_threads=8 bio_threads=16 cpu_threads=32 hash_zone_threads=8 logical_threads=8 physical_threads=8 max_discard=1024' <volume group>

One note of caution: before I upped the defaults, the periodic SSD trim job on RHEL put my system in an extended unusable state! Sometimes a little tweaking can make all the difference. These days fstrim runs once a week like it is supposed to and I don’t even notice it 🙂

If this is your root partition you need to tell dracut about it. Create a file, /etc/dracut.conf.d/vdo.conf, with this content:

force_drivers+=" dm-vdo "

install_items+=" vdoformat vdostats vdodmeventd vdoregenerategeometry vdoforcerebuild vdolistmetadata vdoreadonly vdosetuuid vdodumpconfig vdodumpmetadata vdodumpblockmap vdodebugmetadata "

Then run dracut -p -f --kver=<kernel version> afterwards.
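Before rebooting it is worth checking that the module and tools really ended up in the image. The sketch below reads a file listing on stdin (for instance from `lsinitrd`) and reports any names it cannot find. The function name `initrd_missing_items` is hypothetical, and the names you pass should be whatever you put in vdo.conf.

```shell
# Read an initramfs file listing on stdin and report which of the given
# names do not appear in it. Returns 0 only when every name was found.
initrd_missing_items() {
    listing="$(cat)"
    rc=0
    for item in "$@"; do
        if ! printf '%s\n' "$listing" | grep -q -- "$item"; then
            echo "missing: $item"
            rc=1
        fi
    done
    return "$rc"
}
```

Usage would look something like `lsinitrd /boot/initramfs-<kernel version>.img | initrd_missing_items dm-vdo vdoformat vdostats`, where the image path is an assumption based on the usual RHEL layout. No output means everything was found.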

That should do it! After some use you can check if everything is working as expected with:

❯ vdostats

| Device | 1k-blocks | Used | Available | Use% | Space saving% |
|---|---|---|---|---|---|
| homes-vpool0-vpool | 26214400 | 10101688 | 16112712 | 39% | 9% |
| homes-vpool1-vpool | 73400320 | 55443856 | 17956464 | 76% | 29% |
| neo-vpool0-vpool | 1939865600 | 616301552 | 1323564048 | 32% | 31% |

Unfortunately there is no easy way to get actual de-duplication stats. All I could find online was a method for the old non-LVM version, which meant gathering statistics for many hours and generating a report afterwards. But if you want to test: just copy a large file to a different name in the same volume and watch what happens 🙂
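Since running out of physical space is the real danger with an over-provisioned volume, the Use% column of vdostats is the one to keep an eye on. Here is a minimal sketch that reads vdostats output on stdin and flags pools above a threshold. The function name `vdo_usage_alert` and the default of 80 are my own picks, and it assumes the six-column layout shown in this post.

```shell
# Read vdostats-style output on stdin and print the devices whose
# Use% is at or above a threshold (default 80).
vdo_usage_alert() {
    threshold="${1:-80}"
    awk -v limit="$threshold" '
        NR == 1 { next }                 # skip the header row
        NF >= 6 {
            use = $5; sub(/%/, "", use)  # "76%" -> "76"
            if (use + 0 >= limit)
                print $1 " is " use "% full"
        }
    '
}
```

Run it as `vdostats | vdo_usage_alert 75`. With the numbers from this post that would flag only homes-vpool1-vpool, at 76%.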

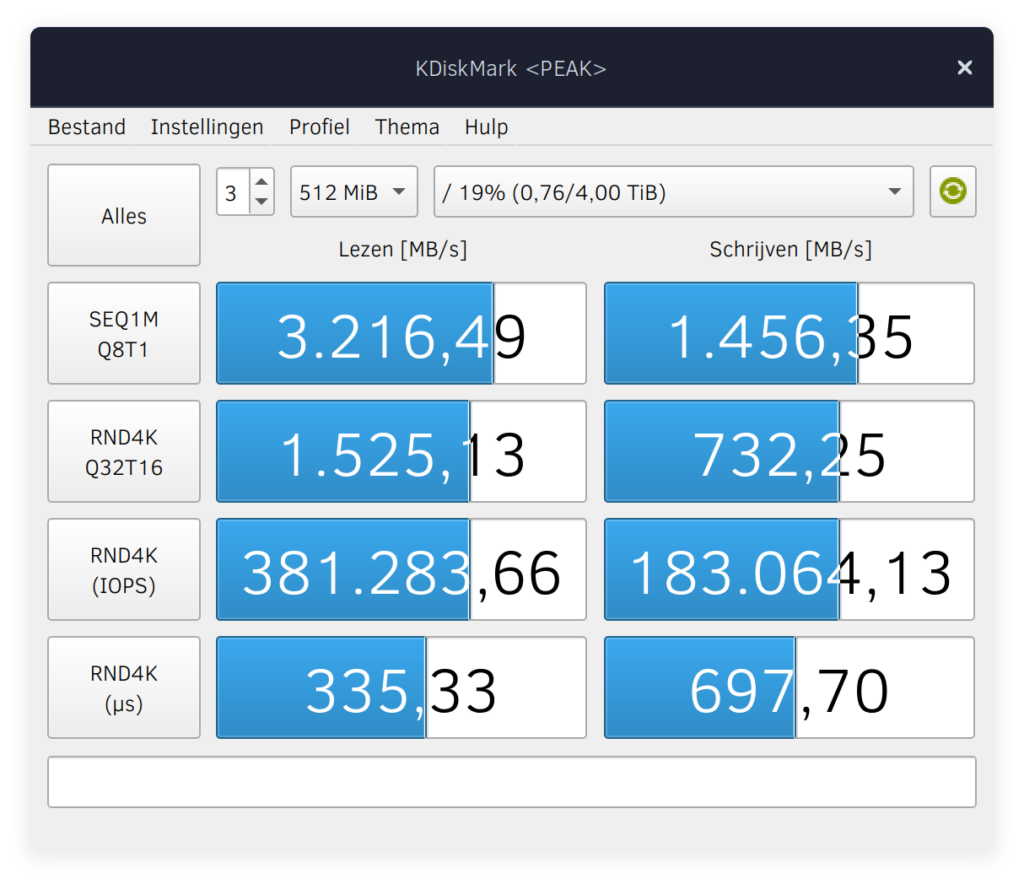

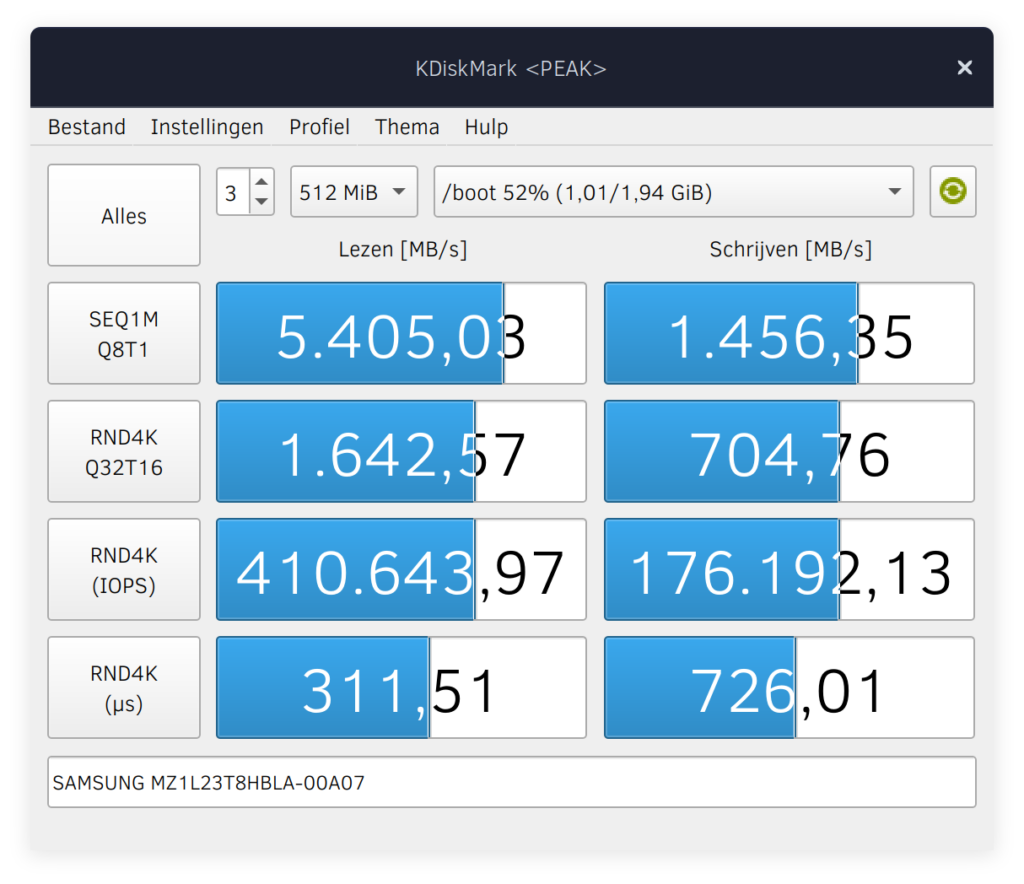

So to end this post: as said before VDO comes at a performance penalty. I’m no performance benchmarking guru, but know how to operate KDiskmark, so here we go. First one is on my LVM VDO xfs root partition, second on the xfs boot partition on the same SSD:

Works for me TM!! IMHO third and fourth row are the ones that really matter, not bad, not bad at all!